The K-nearest neighbors (KNN) algorithm is a simple, easy-to-implement supervised machine learning algorithm that can be used to solve both classification and regression problems.

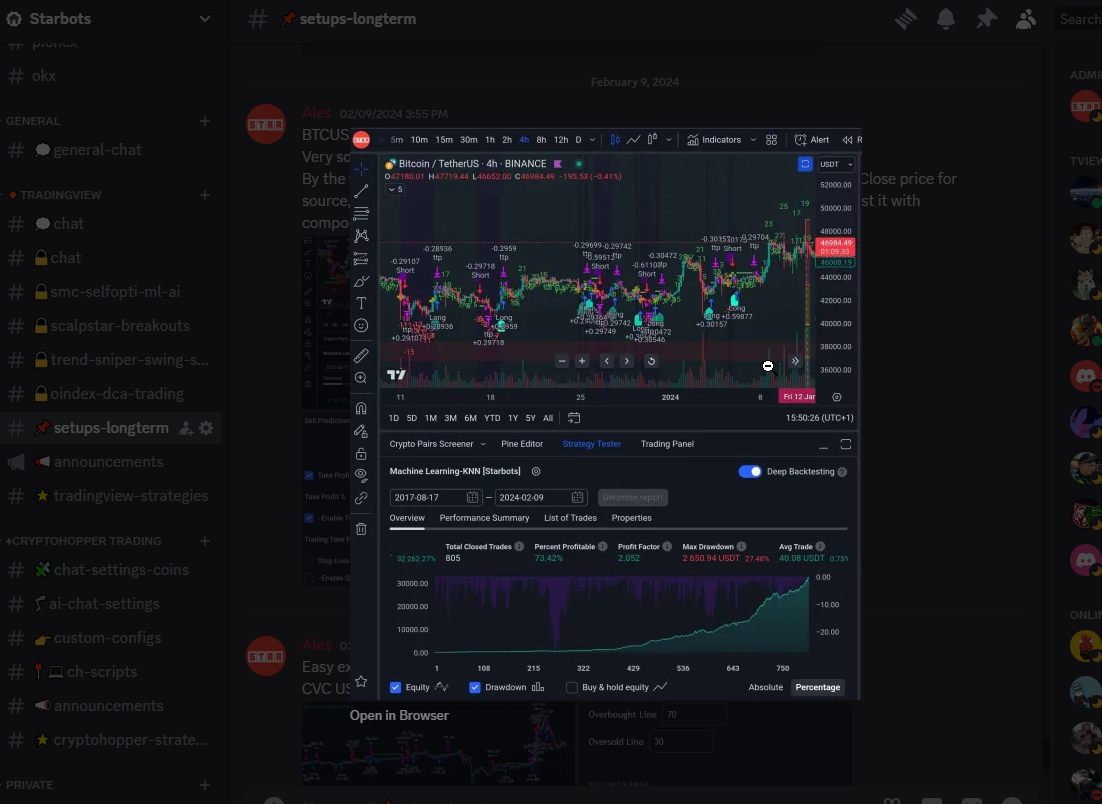

https://www.tradingview.com/script/vSXaRNzM-Machine-Learning-KNN-with-Multiple-Indicators-Starbots/

The KNN algorithm assumes that similar things exist in close proximity; in other words, similar things are near each other. KNN captures the idea of similarity (sometimes called distance, proximity, or closeness) with mathematics we may have learned in childhood: calculating the distance between points on a graph, which here is used to predict the next move.
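The "distance between points on a graph" is most often the Euclidean (straight-line) distance. The minimal sketch below assumes that metric. Other metrics such as Manhattan distance are also used with KNN, and this is not necessarily the one the script uses.

```python
import math

def euclidean_distance(a, b):
    """Straight-line (Euclidean) distance between two points of equal dimension."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of coordinates")
    # Square the per-axis differences, sum them, and take the square root.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

print(euclidean_distance((1, 2), (4, 6)))  # 5.0
```

Python 3.8+ also provides `math.dist(p, q)`, which computes the same value.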

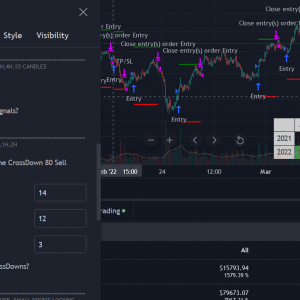

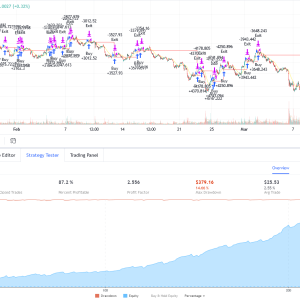

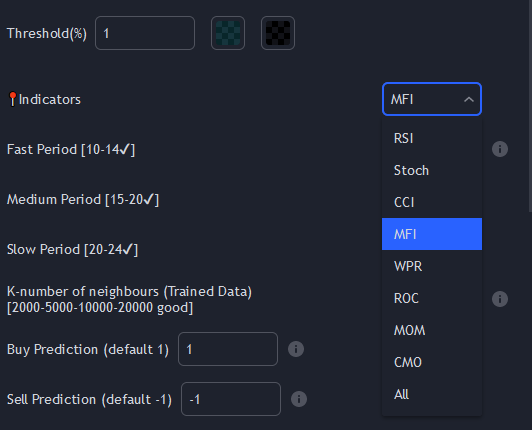

This three-dimensional KNN algorithm looks at what happened in the past when the indicators with three different periods were at similar levels. It then takes the k nearest neighbours, checks their state, and classifies the current point accordingly.
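The idea can be sketched in a few lines, with some loudly stated assumptions. This is not the Star Bots script, which is not reproduced here. The indicator readings and the "up"/"down" labels are hypothetical, and distance is assumed to be Euclidean. Each past bar is stored as a 3-D point (one indicator over a short, medium and long period) together with what happened next. The current bar is then classified by a majority vote of its k nearest past points.

```python
import math
from collections import Counter

def knn_classify(history, query, k):
    """Classify `query` by majority vote among its k nearest points in `history`.

    history: list of (features, label) pairs; features is a tuple of indicator
             readings (here, a hypothetical indicator over three periods).
    """
    # Sort past observations by Euclidean distance to the current indicator levels.
    nearest = sorted(history, key=lambda item: math.dist(item[0], query))[:k]
    # Count the labels of the k nearest neighbours and return the most common one.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical data: (indicator over short, medium, long period) -> next move.
history = [
    ((30.0, 35.0, 40.0), "up"),
    ((32.0, 36.0, 41.0), "up"),
    ((70.0, 65.0, 60.0), "down"),
    ((72.0, 66.0, 58.0), "down"),
    ((31.0, 34.0, 42.0), "up"),
]
current = (33.0, 35.0, 40.0)
print(knn_classify(history, current, k=3))  # up
```

On a tied vote, `Counter.most_common` returns the label it encountered first, so an odd k is a common choice for two classes.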

Understanding KNN machine learning (adapted from https://www.analyticsvidhya.com/blog/2018/03/introduction-k-neighbours-algorithm-clustering/)

How Does the KNN Algorithm Work?

Let’s take a simple case to understand this algorithm. Consider a spread of red circles (RC) and green squares (GS) on a plane.

You want to find the class of the blue star (BS). BS can be either RC or GS and nothing else. The “K” in KNN is the number of nearest neighbors we take the vote from. Let’s say K = 3. We then draw a circle centred on BS that is just large enough to enclose exactly three data points on the plane. Refer to the following diagram for more details:

![scenario2 [KNN Algorithm]](https://av-eks-blogoptimized.s3.amazonaws.com/scenario2.png)

The three closest points to BS are all RC. Hence, with a good level of confidence, we can say that BS belongs to class RC. Here the choice was obvious, because all three votes from the closest neighbors went to RC. The choice of the parameter K is crucial in this algorithm. Next, we look at the factors to consider when choosing the best K.
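The blue-star example can be reproduced in a few lines. The coordinates below are hypothetical, because the figure's exact positions are not given. They were chosen so that the three points nearest BS are all RC. The radius of the circle is the distance from BS to its K-th nearest point.

```python
import math
from collections import Counter

# Hypothetical coordinates for 3 red circles and 3 green squares.
training = [
    ((1.0, 1.0), "RC"),
    ((1.5, 2.0), "RC"),
    ((2.0, 1.5), "RC"),
    ((5.0, 5.0), "GS"),
    ((5.5, 4.5), "GS"),
    ((6.0, 5.5), "GS"),
]
blue_star = (2.0, 2.0)
K = 3

# Rank training points by distance to BS and keep the K closest.
ranked = sorted(training, key=lambda p: math.dist(p[0], blue_star))
neighbours = ranked[:K]

# Radius of the circle centred on BS that just encloses the K nearest points.
radius = math.dist(neighbours[-1][0], blue_star)

votes = Counter(label for _, label in neighbours)
print(votes)                       # Counter({'RC': 3})
print(votes.most_common(1)[0][0])  # RC
print(round(radius, 3))            # 1.414
```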

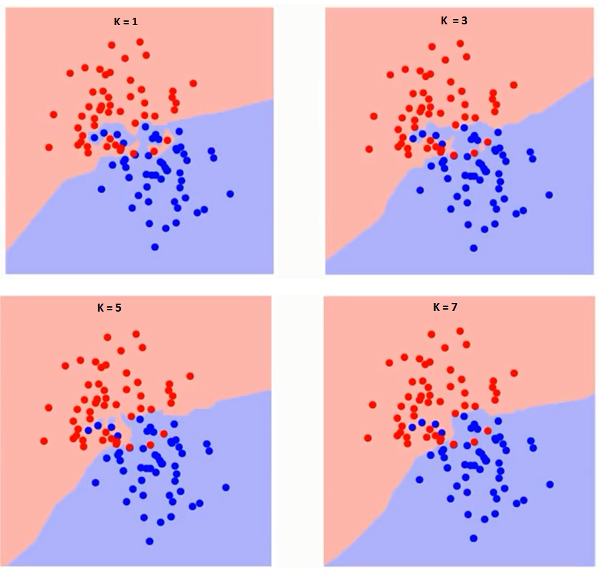

How Do We Choose the Factor K?

First, let us understand exactly how K influences the algorithm. In the last example, if all 6 training observations remain fixed, then for a given value of K we can draw the boundaries of each class. These decision boundaries separate RC from GS. Let’s now look at the effect of the value of K on the class boundaries. The following figures show the boundaries separating the two classes for different values of K.

![K judgement [KNN Algorithm]](https://av-eks-blogoptimized.s3.amazonaws.com/K-judgement.png)

![K judgement2 [KNN Algorithm]](https://av-eks-blogoptimized.s3.amazonaws.com/K-judgement2.png)
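The effect of K on the boundaries can also be checked numerically. The minimal sketch below uses a hypothetical one-dimensional training set of four RC points and three GS points, with one GS point (x = 2.0) sitting among the RCs. It prints the predicted class along a line of query points for K = 1, K = 3 and K = N (all seven training points). Each letter is the class assigned to one query point: R for RC, G for GS.

```python
import math
from collections import Counter

def predict(training, query, k):
    """Majority vote among the k training points closest to `query`."""
    nearest = sorted(training, key=lambda p: math.dist(p[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical 1-D training set: RC mostly on the left, GS on the right,
# plus one GS point at x = 2.0 among the RCs.
training = [((0.0,), "RC"), ((1.0,), "RC"), ((2.0,), "GS"), ((3.0,), "RC"),
            ((4.0,), "RC"), ((8.0,), "GS"), ((9.0,), "GS")]

# Query points x = 0.25, 0.75, ..., 9.75; none is equidistant from two training points.
grid = [(0.25 + 0.5 * i,) for i in range(20)]

def boundary_string(k):
    """One letter per query point: R for RC, G for GS."""
    return "".join("R" if predict(training, q, k) == "RC" else "G" for q in grid)

for k in (1, 3, len(training)):
    print(f"K={k}: {boundary_string(k)}")
# K=1: RRRGGRRRRRRRGGGGGGGG
# K=3: RRRRRRRRRRRRGGGGGGGG
# K=7: RRRRRRRRRRRRRRRRRRRR
```

With K = 1, the stray GS point carves out its own small region. With K = 3, that island disappears and the boundary is smoother. With K = N, every query gets the overall majority class (RC, four votes to three).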

If you look carefully, you can see that the boundary becomes smoother as K increases. As K grows toward the total number of training points, the boundary eventually disappears and everything becomes all blue or all red, depending on the overall majority. The training error rate and the validation error rate are the two measures we use to assess different values of K. Consider first how the training error rate varies with K.

The error rate at K=1 is always zero for the training sample, because the closest point to any training data point is itself (assuming no duplicate points with conflicting labels). Hence, on the training data, the prediction is always correct with K=1. If the validation error curve looked similar, our choice of K would be 1. Following is the validation error curve for varying values of K:

![training error_1 [KNN Algorithm]](https://av-eks-blogoptimized.s3.amazonaws.com/training-error_11.png)

This makes the picture clearer. At K=1 we were overfitting the boundaries. As K increases, the validation error rate first decreases and reaches a minimum. Beyond that minimum, it increases with increasing K. To get the optimal value of K, split the initial dataset into training and validation sets, then plot the validation error curve and take the K at its minimum. This value of K should then be used for all predictions.
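The procedure above can be sketched as follows. Several assumptions apply. The data are synthetic: two overlapping 2-D Gaussian clouds generated with a fixed seed. The split is 70/30. Only odd K from 1 to 31 are tried. In practice, k-fold cross-validation is often used instead of a single split. The sketch prints both error curves as numbers and picks the K with the lowest validation error.

```python
import math
import random
from collections import Counter

def predict(train, x, k):
    """Majority vote among the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: math.dist(p[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def error_rate(train, data, k):
    """Fraction of points in `data` misclassified by KNN fitted on `train`."""
    wrong = sum(predict(train, x, k) != y for x, y in data)
    return wrong / len(data)

# Hypothetical synthetic data: two overlapping 2-D Gaussian clouds.
rng = random.Random(0)
points = [((rng.gauss(0, 1.2), rng.gauss(0, 1.2)), "RC") for _ in range(100)]
points += [((rng.gauss(2, 1.2), rng.gauss(2, 1.2)), "GS") for _ in range(100)]
rng.shuffle(points)

# Separate training and validation sets from the initial dataset (70/30 split).
split = int(0.7 * len(points))
train, valid = points[:split], points[split:]

candidate_ks = range(1, 32, 2)  # odd K: no vote ties with two classes
train_err = {k: error_rate(train, train, k) for k in candidate_ks}
valid_err = {k: error_rate(train, valid, k) for k in candidate_ks}

# Pick the K at the minimum of the validation error curve (smallest K on ties).
best_k = min(candidate_ks, key=lambda k: valid_err[k])
for k in candidate_ks:
    print(f"K={k:2d}  train={train_err[k]:.3f}  valid={valid_err[k]:.3f}")
print("best K on validation set:", best_k)
```

The training error at K=1 comes out as zero, because each training point is its own nearest neighbour. The validation column is the one used to choose K.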

All Star strategies use dynamic alerts

By using Star Bots Trading Algorithms, you agree to the terms of the EULA and consent to be bound by them. You use the Trading Algorithm on your own behalf and at your own risk. Star Bots is not responsible or liable for your direct or indirect losses, damages, costs and expenses. You are not allowed to edit, copy, reproduce, distribute, or resell the Trading Algorithm, use it for signalling services or copy-trading, or otherwise use it for your own or others’ interests or for any commercial purpose. If this or any other breach of the EULA occurs, your contract will be terminated immediately and you are obligated to stop all access to and use of this Strategy.

![scenario1 [KNN Algorithm]](https://av-eks-blogoptimized.s3.amazonaws.com/scenario1.png)

![training error [KNN Algorithm]](https://av-eks-blogoptimized.s3.amazonaws.com/training-error.png)